Researchers who analyzed four major pathology AI models used in cancer diagnosis found that the models performed inconsistently depending on patients’ self-reported gender, race, and age. The researchers also proposed several possible reasons for this demographic bias.

To address the problem, the team developed a framework called FAIR-Path, which substantially reduced bias in the models. Senior author Kun-Hsing Yu, an associate professor of biomedical informatics at the Blavatnik Institute and an assistant professor of pathology at Brigham and Women’s Hospital, said the bias in pathology AI was unexpected, because reading a patient’s demographics from a pathology slide is generally considered difficult for human pathologists.

Understanding and mitigating AI bias in medicine matters because bias can affect diagnostic accuracy and patient outcomes. The success of FAIR-Path suggests that the fairness of AI cancer pathology models, and possibly of other medical AI models, can be improved with minimal effort.

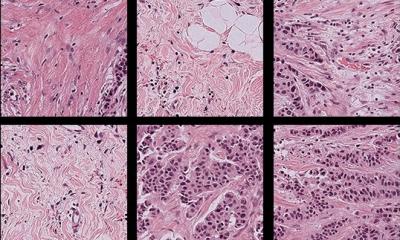

Yu and his team examined bias in four standard AI pathology models designed for cancer evaluation. These deep-learning models were trained on collections of annotated pathology slides, from which they learn biological patterns that they then use to diagnose new slides. The researchers tested the models on a large repository of pathology slides covering 20 cancer types.

All four models showed biased performance, producing less accurate diagnoses for certain groups defined by self-reported demographics. For instance, the models had difficulty distinguishing lung cancer subtypes in African American and male patients, and breast cancer subtypes in younger patients. They also struggled to detect several cancer types, including breast, renal, thyroid, and stomach cancers, in certain demographic groups. Such disparities appeared in approximately 29% of the diagnostic tasks. These inaccuracies arise because the models extract demographic information from the slides and rely on demographic-specific patterns to make their diagnoses.
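To make the idea of a performance disparity more concrete, the sketch below shows one simple way to compare a classifier’s accuracy across self-reported demographic groups on a single diagnostic task. It is an illustrative assumption, not the researchers’ method: the helper name `group_accuracy_gap`, the use of plain accuracy as the metric, and the toy lung cancer subtype labels (LUAD for adenocarcinoma, LUSC for squamous cell carcinoma) are all hypothetical choices for this example.

```python
from collections import defaultdict


def group_accuracy_gap(y_true, y_pred, groups):
    """Return per-group accuracy and the largest gap between any two groups.

    Hypothetical helper for illustration only: y_true and y_pred are
    sequences of class labels (e.g. cancer subtypes), and groups holds each
    patient's self-reported demographic group.
    """
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have the same length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    # Accuracy within each group, then the spread between best and worst group.
    accuracy = {g: correct[g] / total[g] for g in total}
    gap = max(accuracy.values()) - min(accuracy.values())
    return accuracy, gap


# Toy example with made-up labels (not data from the study).
y_true = ["LUAD", "LUSC", "LUAD", "LUSC", "LUAD", "LUSC"]
y_pred = ["LUAD", "LUSC", "LUAD", "LUAD", "LUSC", "LUSC"]
groups = ["A", "A", "A", "B", "B", "B"]

accuracy, gap = group_accuracy_gap(y_true, y_pred, groups)
print(accuracy)       # {'A': 1.0, 'B': 0.3333333333333333}
print(round(gap, 3))  # 0.667
```

A real fairness analysis would typically use a metric suited to the task (such as AUC) and test whether a gap is statistically significant before counting a task as showing a disparity; the specific metric and test used in the study are not described here.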

The results were surprising because pathology evaluation is generally expected to be objective, so the researchers set out to understand why pathology AI was not equally objective.

They identified three main factors. First, samples from certain demographic groups are harder to obtain, so the models were trained on unequal numbers of samples across groups, which made their diagnoses less accurate for underrepresented groups. However, the researchers found that the problem ran deeper than sample size: the models sometimes performed worse for one demographic group even when the groups’ sample sizes were similar. Further analysis suggested that differences in disease incidence could also contribute. Some cancers are more common in certain groups, so the models become better at diagnosing those cancers in those groups and struggle in groups where incidence is lower.
